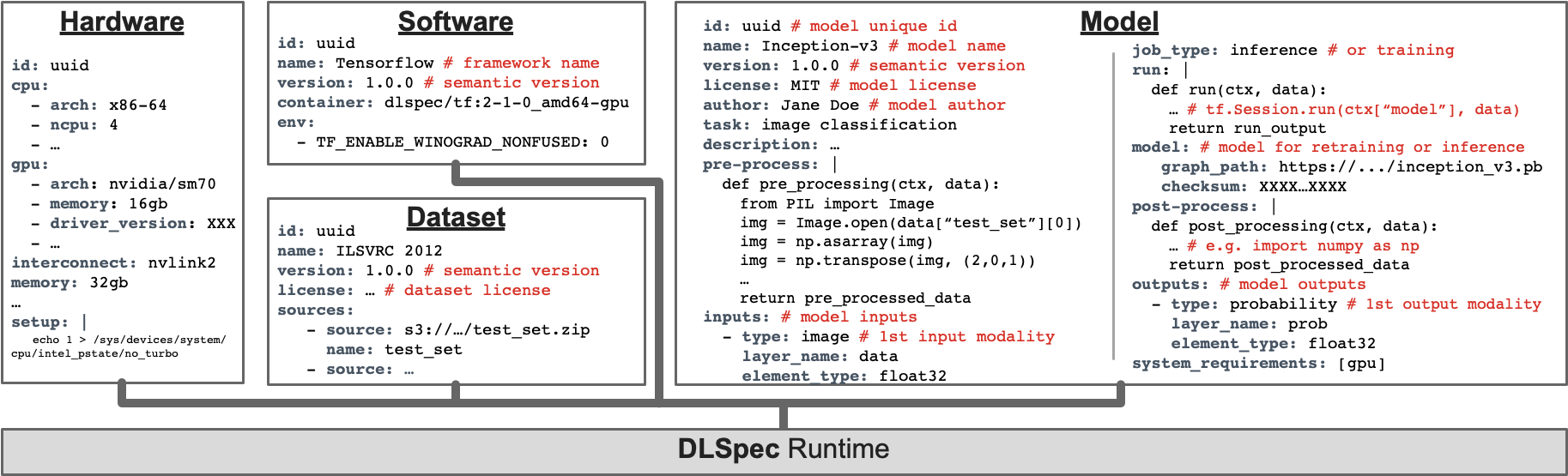

Program- and human-readable To make it possible to develop a runtime that executes DLSpec, the specification should be readable by a program. To allow a user to understand what the task does and repurpose it (e.g. use a different HW/SW stack), the specification should be easy to introspect.

DLSpec

A Deep Learning Artifact Exchange Specification